Dataset Properties

When adding a dataset onto Ambience Dataset module, there are several tabs:

- Properties

- Filter (MongoDB dataset only)

- Aggregation Pipeline

- Export Pipeline

- Schema

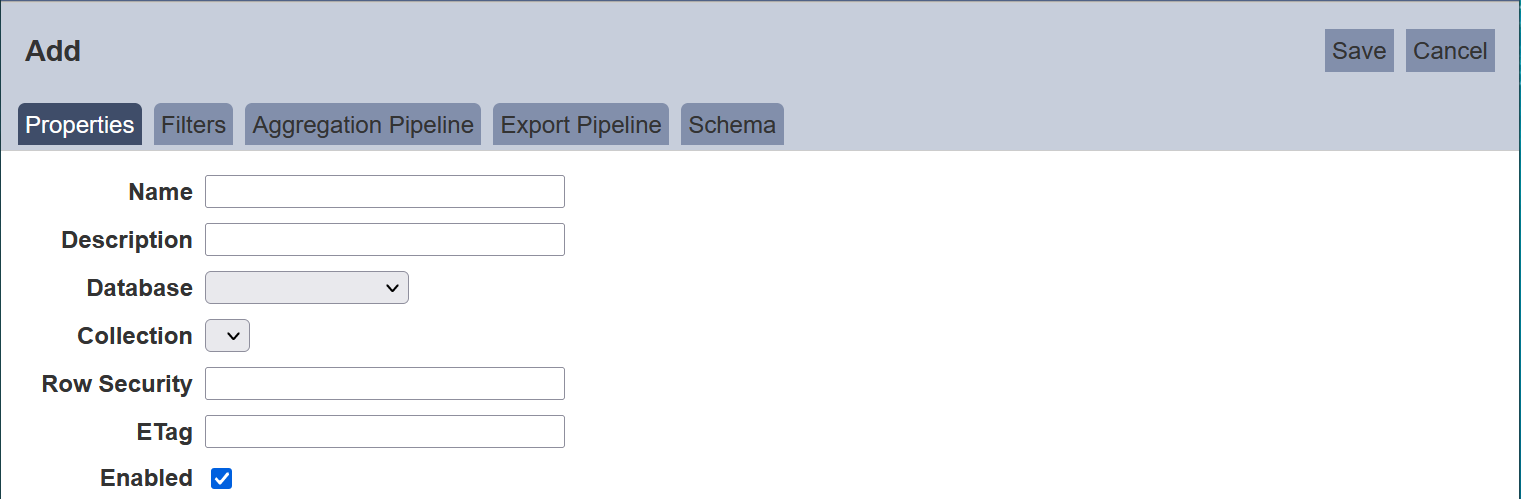

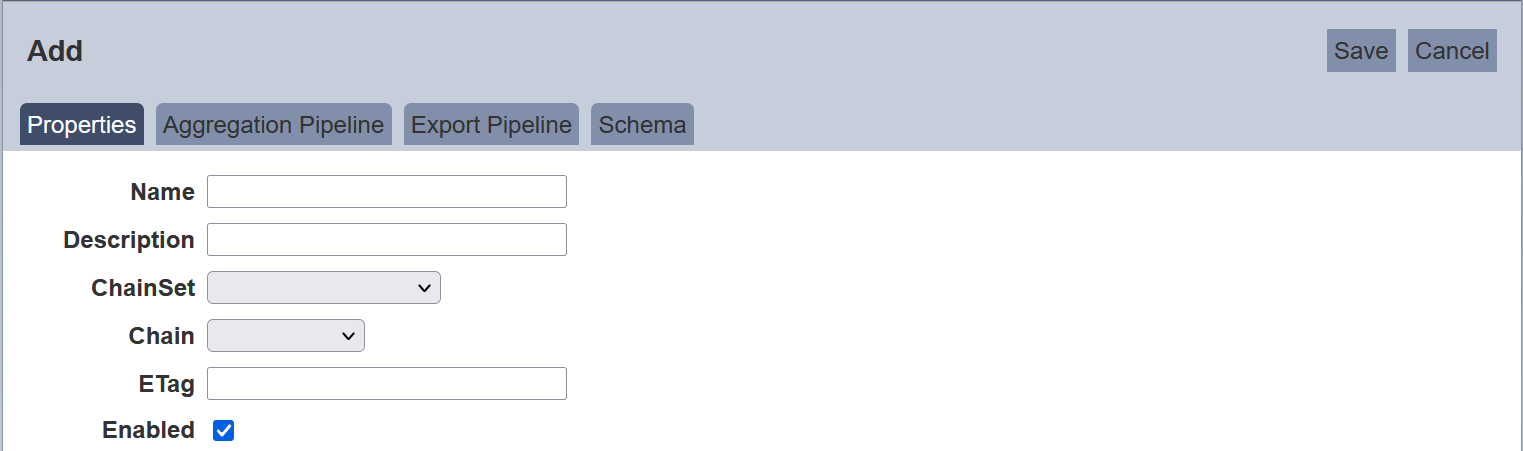

Screenshots below shows the Properties setting for both MongoDB dataset and ETL dataset respectively.

Properties Tab

The following is the basic setup for a new dataset. The interface looks different between MongoDB dataset and ETL dataset.

| Field | Description |

|---|---|

| Name | A unique dataset name. (Required) |

| Description | Additional text to describe the dataset. (Optional) |

| Database | The database that holds the collection used as the source of records for this dataset. Choose from the list of databases which is based on the MongoDB database setting in the Ambience application.conf file. (Required for MongoDB datasets) |

| Collection | The collection (table) used as the source of records for this dataset. Choose from the list of collections. (Required for MongoDB datasets) |

| Row Security | Checks the credentials of user to determine which rows of data are to be displayed for that user. (Optional) If undefined, all data is displayed. If user does not have the credentials, no data wil lbe displayed. |

| ChainSet | The ETLs (chainset) created in ETL module. Choose one from the chainset. (Required for ETL datasets) |

| Chain | The chains with Dataset Endpoint step in the selected ETL used as the source of records. Choose from the list. (Required for ETL datasets) |

| ETag | Use to reflect the dataset has changed since its last datetime. By default, it is blank when a dataset is created. (Optional) |

| Enabled | Status of the dataset. It is “Enabled” by default. (Required) |

Example: Using Row Security

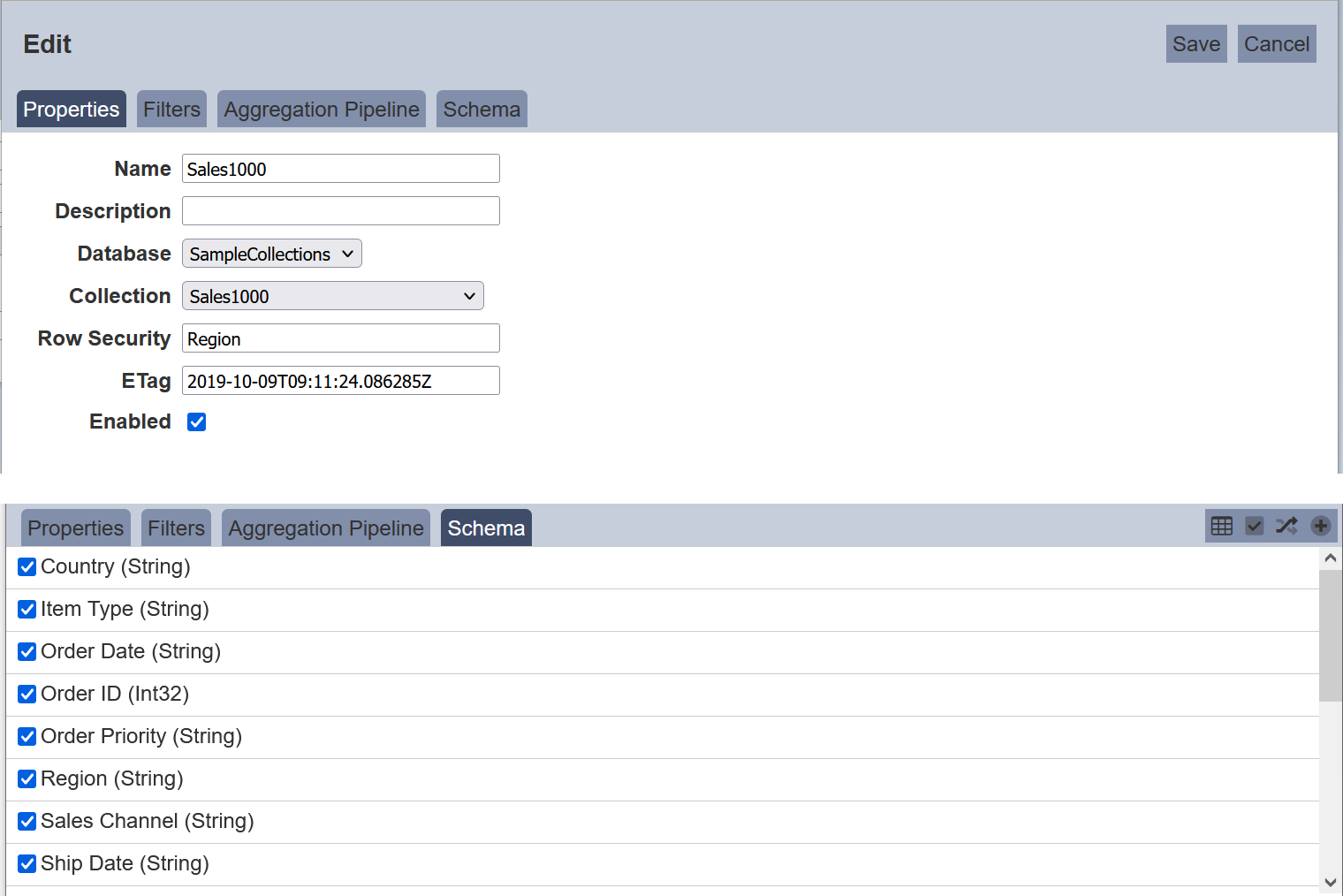

The “Row Security” feature allows access rights control on the dataset. It applies to MongoDB dataset only. If no field is defined, all data will be displayed in the views in the dashboard that uses the dataset. If a field is defined, users with the correct credentials will view the appropriate data in the views in the dashboard.

To do so, in the Datasets page, click on the ![]() “Edit” icon under the “Actions” column corresponding the desired dataset. in the “Properties” tab, key in the field that you wish to control. The field name must be part of the schema. In the example below, the field “Region” is selected as the control.

“Edit” icon under the “Actions” column corresponding the desired dataset. in the “Properties” tab, key in the field that you wish to control. The field name must be part of the schema. In the example below, the field “Region” is selected as the control.

Click on the “Save” button to save the change. The options for the field “Region” now can be used as values to check against users credential values.

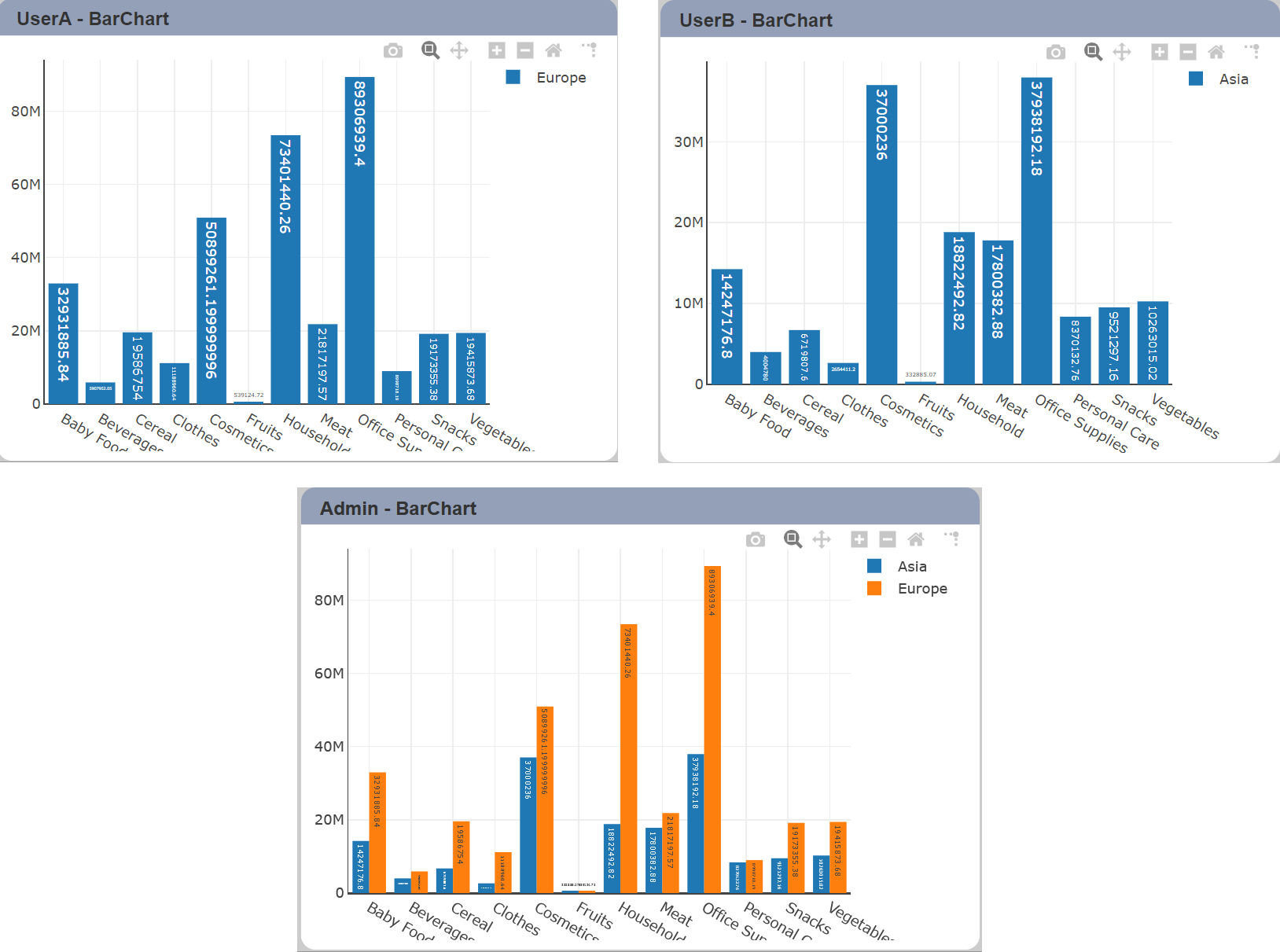

In the Roles page, create roles using the options for “Region”. In this example, UserA is granted the role “Europe” while UserB is granted the role “Asia”. Admin is granted both “Asia” and “Europe” roles. UserA and User B will only see data for their own region, which is Asia and Europe respectively. Admin will see 2 sets of data, from Asia and Europe.

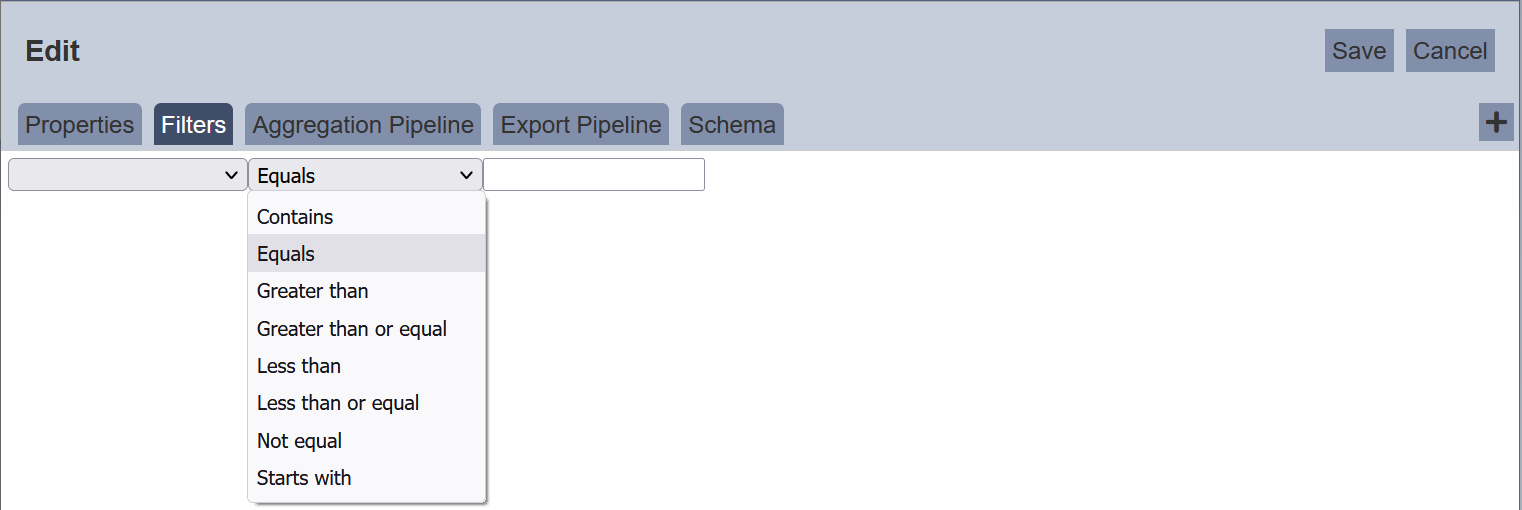

Filter Tab (MongoDB Dataset only)

Ambience Datasets module supports comparison query operators such as equal, greater than or equal, etc. The filter is an optional step, where one of the operators can be selected from the dropdown selection to perform a quick filter on a field in the collection. The filter is applied to the collection data before aggregation.

Aggregation Pipeline Tab

Aggregation pipeline processes or transforms documents (records) into an aggregated result, for example, a MongoDB syntax below is used to unwind an array of field from documents,

db.inventory.aggregate( [ { $unwind : "$sizes" } ] )

However, in Dataset Management, you write the aggregation pipeline with JSON syntax as below to perform the same operation,

[ { "$unwind" : "$sizes" } ]

Aggregation pipeline validates the JSON syntax and shows the test results on the right-side panel. The benefits of aggregation pipeline are,

- All data processing is done inside MongoDB including those datasets which use the ETL chainsets.

- Aggregation pipeline operations have an optimization phase which attempts to reshape the aggregation pipeline for improved performance.

- Network traffic to the client is smaller.

Aggregation pipeline is optional for both MongoDB dataset and ETL dataset. You can click on the “Test Aggregation” button even without writing aggregation to show the result. More examples as below,

Sample 1: Deconstruct an array field fom the input documents to a document for each element using $unwind.

Consider a collection with following document,

{ "_id" : 1, "item" : "ABC1", sizes: [ "S", "M", "L"] }

The following aggregation uses $unwind to output a document for each element in the sizes array.

[{ "$unwind" : "$sizes" }]

Each document is identical except for the value of sizes, hence, it returns three lines.

{ "_id" : 1, "item" : "ABC1", "sizes" : "S" }

{ "_id" : 1, "item" : "ABC1", "sizes" : "M" }

{ "_id" : 1, "item" : "ABC1", "sizes" : "L" }

Sample 2: Use of $project and $group to group a field not equal to 1 and get a count.

$project takes a document that specifies the inclusion of fields, the suppression of the "_id“ field, the addition of new fields and the resetting of the values of existing fields. For example, $project takes ”patient_name" field in its output documents. Then, selects documents to process using $match operator, in this case not equal to 1, and returns the results in the $group operator to compute a count of documents.

[

{

"$project" : {

"patient name" : 1

}

},

{

"$group" : {

"_id" : "$patient name",

"count" : {

"$sum" : 1

}

}

},

{

"$match" : {

"count" : {

"$ne" : 1

}

}

}

]

Sample 3: Using of $elx prefix.

The “$elx” prefix is used in aggregation pipeline where operators need to be used but are not recognised in MongoDB. These prefixed operators are are handled by the dataset pre-processor and removed before passing to MongoDB for evaluation.

The below code

[

{

"$elx-parameter" : {

"name" : "ABC",

"value" : {"anything" : true}

}

},

{

"$match" : {

"$elx-lookup" : "ABC"

}

}

]

will become

[

{

"$match" : {"anything" : true}

}

]

when passed to MongoDB. If there are several parameters with the same name, the first parameter will be chosen.

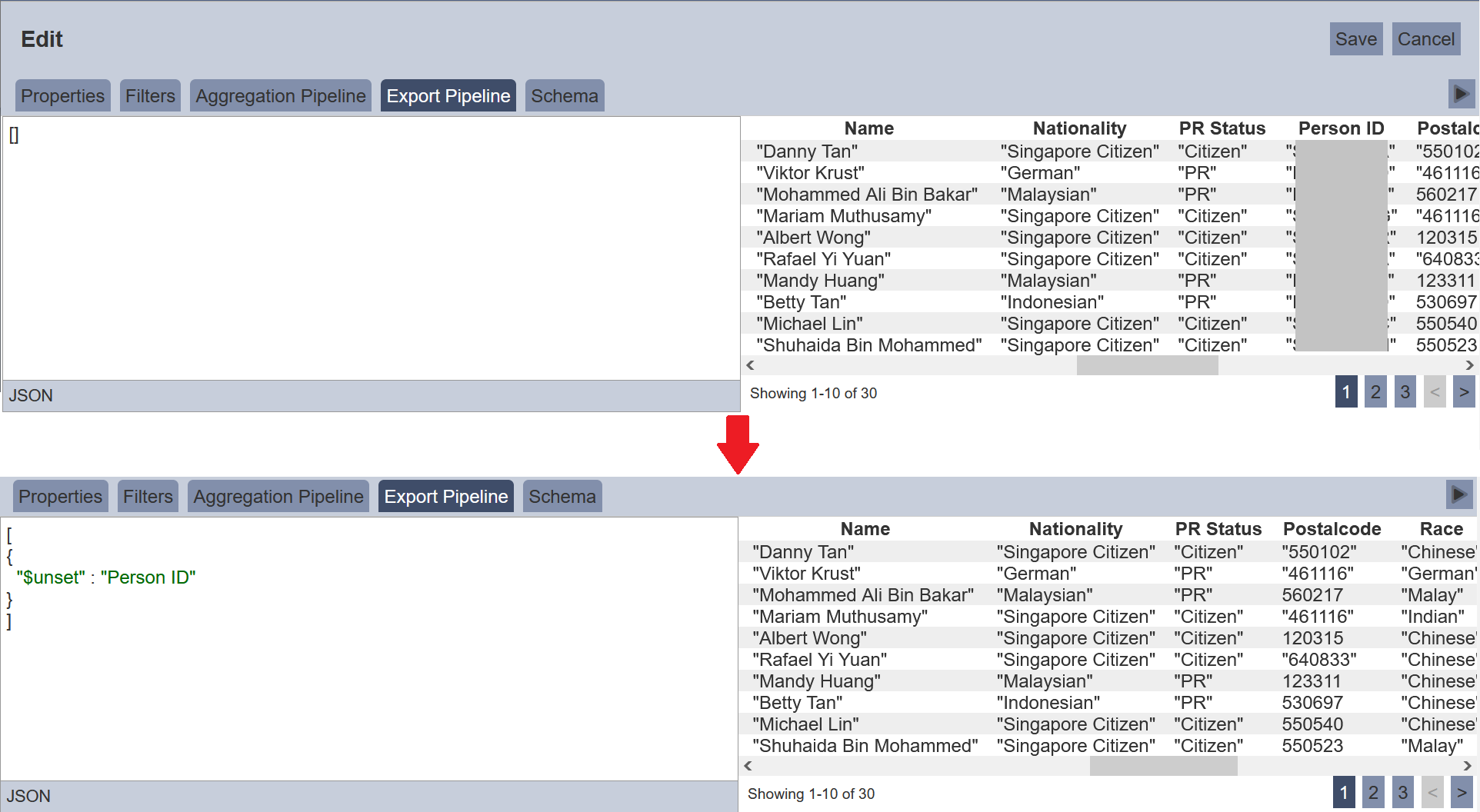

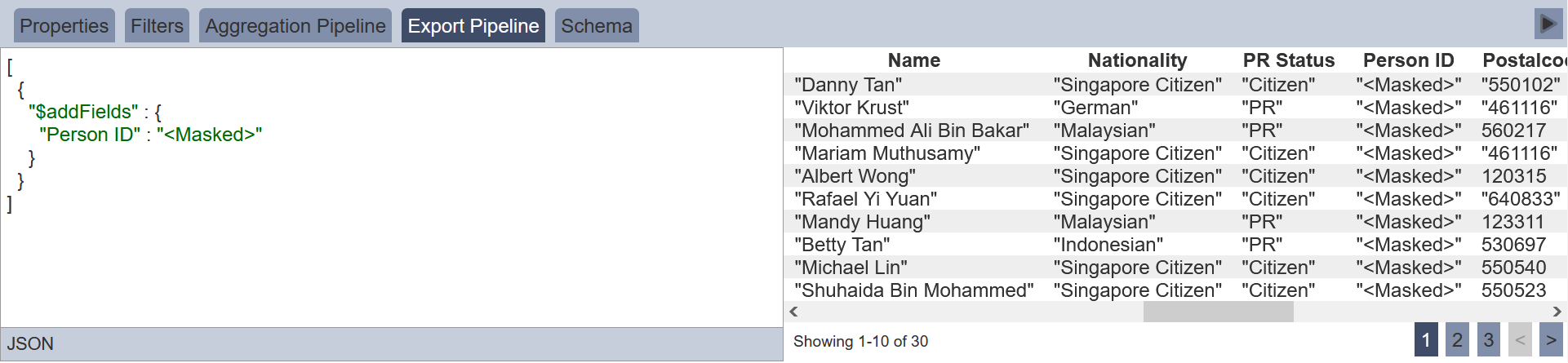

Export Pipeline

Export Pipeline allows you to manage the data to be exported. For example, some data may includes sensitive information, such as NRIC. You can use the Export Pipeline to hide the NRIC information before exporting the data or any data that you do not wish to export out.

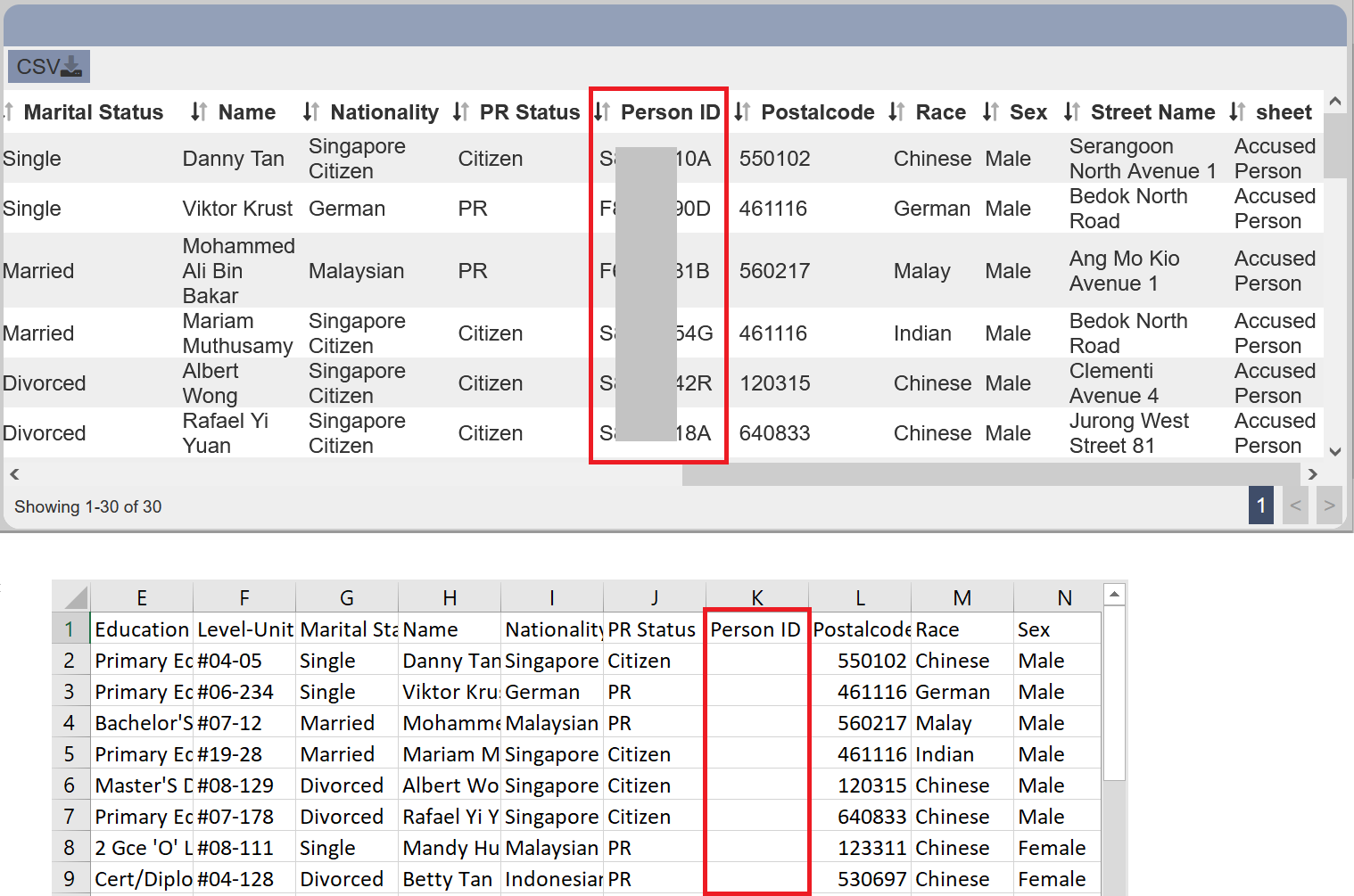

In the example below, the column “Person ID” which contains the NRIC,need to be hidden before exporting the data. In the Export Pipeline tab, enter the code to hide the “Person ID” column. Click on the “Test Aggregation” button at the right on the page, under the “Cancel” button. You will see that the “Person ID” column is removed.

In the Dashboard page, the linear table will display all columns. When exporting the data, an empty “Person ID” column will appear.

You can also use masking to mask the data, either totally or partially.

The Aggregation Pipeline will run first before the Export Pipeline steps are run, thus there is no need to duplicate any aggregation steps in the Export Pipeline.

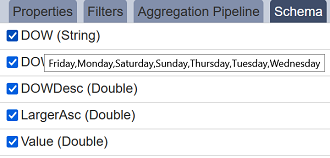

Schema Tab

Infer schema shows all the fields in the dataset based on the filter and/or aggregation, except fields start with "_“ by default, for example, ”_id“ and ”_metadata“ (used by the Imports module). There are options to include these fields by clicking the ”Expand“ button (Show Intervals) and invert selection in the dataset by clicking the ”Invert Selection" at the top right of the panel. There is a warning if you attempt to save the dataset setup without inferring the schema. Also, after changing the Aggregation Pipeline, the schema may change and it should be inferred again.

You can hover over the fields to see enumerated values if there are less than X values (where X is defined in config as ambience.datasets.choices.limit = 100). For example “Monday”, “Tuesday”, etc. if you hover over DayOfWeek (DOW) field,

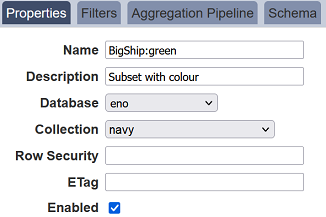

Subset Dataset

Datasets can be broken down into subset with different filter criteria and/or aggregation pipeline by using a colon to distinguish a subset from a dataset, for example, BigShip:green where green is the subset of the BigShip dataset. If the BigShip dataset does not exist, the BigShip:green becomes a dataset instead of a subset.